Using INSERT INTO .. SELECT to Bulk Load Data with Minimal Logging

You can use INSERT INTO

- The recovery model of the database is set to simple or bulk-logged.

- The target table is an empty or nonempty heap.

- The target table is not used in replication.

- The TABLOCK hint is specified for the target table.

BOL also describes the logging behaviour of SELECT ... INTO clause:

The amount of logging for SELECT...INTO depends on the recovery model in effect for the database. Under the simple recovery model or bulk-logged recovery model, bulk operations are minimally logged. With minimal logging, using the SELECT... INTO statement can be more efficient than creating a table and then populating the table with an INSERT statement.

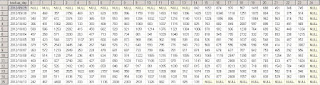

Seeing is believing. So I planed several test cases in my SQL Express 2012 instance. In these test cases, I used different methods to populate 1M rows from an existing table called big_table to a new table called my_table.

Test case 1 using "SELECT ... INTO"

use dbadmin go drop table my_table; go DBCC SHRINKFILE (N'DBAdmin_log' , 0, TRUNCATEONLY) go declare @size_s float declare @size_e float SELECT @size_s = cntr_value FROM sys.dm_os_performance_counters WHERE counter_name = 'Log File(s) Used Size (KB)' AND instance_name = 'DBAdmin' print 'start : ' + str(@size_s) select b.scattered_data, b.vc_small, b.id into my_table from big_table b SELECT @size_e = cntr_value FROM sys.dm_os_performance_counters WHERE counter_name = 'Log File(s) Used Size (KB)' AND instance_name = 'DBAdmin' print 'end : ' + str(@size_e) PRINT 'size used (KB) =' + convert(varchar(30), @size_e - @size_s)I configured the database DBADMIN to different recovery mode and run the above code, here are the output

/* test run 1 - simple (1 row(s) affected) DBCC execution completed. If DBCC printed error messages, contact your system administrator. start : 428 (1000000 row(s) affected) end : 996 size used (KB) =568 */ /* test run 2 - bulk-logged (1 row(s) affected) DBCC execution completed. If DBCC printed error messages, contact your system administrator. start : 450 (1000000 row(s) affected) end : 1078 size used (KB) =628 */ /* test run 3 - full (1 row(s) affected) DBCC execution completed. If DBCC printed error messages, contact your system administrator. start : 432 (1000000 row(s) affected) end : 33276 size used (KB) =32844 */It can be seen that with Simple or Bulk-logged mode, the transaction log used only 500-600 KB, while with Full recovery mode it is about 32 MB. After that, I have tested "INSERT INTO ... SELECT" with different recovery mode and also check the effect of 'TABLOCK' hint

Test case 2 using "INSERT INTO ... SELECT"

use dbadmin go drop table my_table; go create table my_table( A int, B varchar(10), C int ); DBCC SHRINKFILE (N'DBAdmin_log' , 0, TRUNCATEONLY) go declare @size_s float declare @size_e float SELECT @size_s = cntr_value FROM sys.dm_os_performance_counters WHERE counter_name = 'Log File(s) Used Size (KB)' AND instance_name = 'DBAdmin' print 'start : ' + str(@size_s) -- insert into my_table (A,B,C) insert into my_table with (TABLOCK) (A,B,C) select b.scattered_data, b.vc_small, b.id from big_table b SELECT @size_e = cntr_value FROM sys.dm_os_performance_counters WHERE counter_name = 'Log File(s) Used Size (KB)' AND instance_name = 'DBAdmin' print 'end : ' + str(@size_e) PRINT 'size used (KB) =' + convert(varchar(30), @size_e - @size_s)Test results:

/* test run 1 - Simple (1 row(s) affected) DBCC execution completed. If DBCC printed error messages, contact your system administrator. start : 450 (1000000 row(s) affected) end : 1009 size used (KB) =559 */ /* test run 2 - Bulk-logged (1 row(s) affected) DBCC execution completed. If DBCC printed error messages, contact your system administrator. start : 450 (1000000 row(s) affected) end : 1017 size used (KB) =567 */ /* test run 3 -- Full (1 row(s) affected) DBCC execution completed. If DBCC printed error messages, contact your system administrator. start : 450 (1000000 row(s) affected) end : 33408 size used (KB) =32958 */ /* test run 4 -- Simple but without TABLOCK hint (Full, Bulk-logged have similar values) (1 row(s) affected) DBCC execution completed. If DBCC printed error messages, contact your system administrator. start : 428 (1000000 row(s) affected) end : 125834 size used (KB) =125406 */In the first three test run, I used the "TABLOCK" hint. It can be seen that in terms of the amount of transaction log used, using "INSERT INTO ... SELECT" with "TABLOCK" hint is essentially same as using "SELECT ... INTO" regardless of recovery mode. Without the "TABLOCK" hint, regardless of the recovery mode, using "INSERT INTO ... SELECT" will generate same amount of transaction log and 4 times more than that in the case of FULL recovery mode with the hint ( i.e. 125406 KB vs 32958 KB in my test case). People think "INSERT INTO ... SELECT" is slow may be because they don't always remember to add this hint when appropriate.