Thursday, July 27, 2006

lz0-031- Question to be reviewed again

Drop a column.

- You need first mark column as UNUSED with

SQL> ALTER TABLE table_name SET UNUSED COLUMN column_name.

- And only after that you can drop this column from the table with

SQL> ALTER TABLE table_name DPOP UNUSED COLUMNS

Dropping a Column: Example

This statement illustrates the drop_column_clause with CASCADE CONSTRAINTS. Assume table t1 is created as follows:CREATE TABLE t1 (

pk NUMBER PRIMARY KEY,

fk NUMBER,

c1 NUMBER,

c2 NUMBER,

CONSTRAINT ri FOREIGN KEY (fk) REFERENCES t1,

CONSTRAINT ck1 CHECK (pk > 0 and c1 > 0),

CONSTRAINT ck2 CHECK (c2 > 0)

);

An error will be returned for the following statements:/* The next two statements return errors:

ALTER TABLE t1 DROP (pk); -- pk is a parent key

ALTER TABLE t1 DROP (c1); -- c1 is referenced by multicolumn

-- constraint ck1

Submitting the following statement drops column pk, the primary key constraint, the foreign key constraint, ri, and the check constraint, ck1:ALTER TABLE t1 DROP (pk) CASCADE CONSTRAINTS;

(note: you can do:

??> how to add a table column?

----------------------------------------------------------------

QUESTION 38

For a tablespace created with automatic segment-space management, where is free space managed?

A. In the extent

B. In the control file

C. In the data dictionary

D. In the undo tablespace

Answer: D

Free space is managed in the undo tablespace for a tablespace created with automatic segment-

DS: How is it mananged?

-----------------------

QUESTION 41

After running the ANALYZE INDEX orders cust_idx VALIDATE STRUCTURE command, you query the

INDEX_STATS

view and discover that there is a high ratio of DEL_LF_ROWS to LF_ROWS values for this index.

You decide to reorganize the index to free up the extra space, but the space should remain allocated to the

ORDERS_CUST_IDX index so that it can be reused by new entries inserted into the index.

Which command(s) allows you to perform this task with the minimum impact to any users who run queries that

need to access this index while the index is reorganized?

A. ALTER INDEX REBUILD

B. ALTER INDEX COALESCE

C. ALTER INDEX DEALLOCATE UNUSED

D. DROP INDEX followed by CREATE INDEX

Answer: B

To perform this task you need to use the ALTER INDEX COALESCE command to avoid overhead on the users

who run queries that

need access this index while the index is reorganized.

Incorrect Answers

A: The ALTER INDEX REBUILD command is used to correct index staleness. In order to rebuild the index,

Oracle places a SDML lock on the base table whose index is about to be rebuilt. During the time Oracle holds

this DML lock, you cannot make any changes to data in the base table. You can also use a less-restrictive

locking mechanisms. It requires to use keyword ONLINE in the ALTER INDEX REBUILD command.

C: The ALTER INDEX DEALLOCATE UNUSED can cause an impact to users who run queries.

D: You cannot drop and than recreate index because of heavy index usage by users.

-------------------------------------------

QUESTION 58

Which type of table is usually created to enable the building of scalable applications, and is useful for large

tables that can be queried or manipulated using several processes concurrently?

A. Regular table

B. Clustered table

C. Partitioned table

D. Index-organized table

Answer: C

Partitioned tables can be queried or manipulated using several processes concurrently. This type of tables is

very

helpful to build scalable applications with large tables.

Incorrect Answers

A: Regular tables can be also queried or manipulated using parallel processing, but the gain of partitioned

tables is more significant.

B: Clustered tables cannot be manipulated using several processes concurrently because they contain some

blocks as a common part for two or more tables. Also there is no sense to build clusters on the large tables with

high level of inserts, updates and deletes.

D: Index-organized tables are particularly useful for data that is mostly retrieved based on primary key. They

are not good source for parallel data processing.

-----------------------------------------------------------------------

QUESTION 74

You set the value of the OS_AUTHENT_PREFIX initialization parameter to OPS$ and created a user account

by issuing this SQL statement:

CREATE USER OPS$smith

IDENTIFIED EXTERNALLY;

Which two statements are true? (Choose two.)

A. Oracle server assigns the DEFAULT profile to the user.

B. You can specify the PASSWORD EXPIRE clause for an external user account.

C. The user does not require CREATE SESSION system privilege to connect to the database.

1Z0-031

D. If you query the DBA_USERS data dictionary view the USERNAME column will contain the value SMITH.

E. The user account is maintained by Oracle, but password administration and user authentication are performed

by the operating

system or a network service.

Answer: A, E

If you set the value of the OS_AUTHENT_PREFIX initialization parameter to OPS$ Oracle server assigns the

DEFAULT profile to the user defined externally. Also the user account is maintained by Oracle, but password

administration and user authentication are performed by the operating system or a network service, not Oracle.

A user defined externally does not require CREATE SESSION system privilege to connect to the database. The

DBA_USERS data

dictionary will show in the USERNAME column value SMITH, not OPS$SMITH.

Incorrect Answers

B: You cannot specify the PASSWORD EXPIRE clause for an external user account.

C: The user requires CREATE SESSION system privilege to connect to the database as any other database user.

D: The DBA_USERS will show OPS$SMITH value in the USERNAME column, not SMITH.

OCA Oracle 9i Associate DBA Certification Exam Guide, Jason Couchman, p. 807-810

Chapter 15: Managing Database Users

----------------------

QUESTION 75

Which two statements about segments are true? (Choose two.)

A. Each table in a cluster has its own segment.

B. Each partition in a partitioned table is a segment.

C. All data in a table segment must be stored in one tablespace.

D. If a table has three indexes only one segment is used for all indexes.

E. A segment is created when an extent is created, extended, or altered.

F. A nested table of a column within a table uses the parent table segment.

Answer: B, C

Each partition in a partitioned table is a segment. All data in a table segment must be stored in one tablespace.

Incorrect Answers

A: Each table in a cluster does not have its own segment. Clustered tables contain some blocks as a common

part for two

or more tables. Clusters enable you to store data from several tables inside a single segment so users can

retrieve data from those two tables together very quickly.

D: It can be some index segments for the one table.

E: A segment is created when an extent is created, not when extent is extended or altered.

F: A nested table of a column within a table does not use the parent table segment: it has its own.

--------------------------------

QUESTION 77

Examine the command:

CREATE TABLE employee

( employee_id NUMBER CONSTRAINT employee_empid_pk

PRIMARY KEY,

employee_name VARCNAR2(30),

manager_id NUMBER CONSTRAINT employee_mgrid_fk

REFERENCES employee (employee_id));

The EMP table contains self referential integrity requiring all NOT NULL values inserted in the

MANAGER_ID column to

exist in the EMPLOYEE_ID column.

Which view or combination of views is required to return the name of the foreign key constraint and the

referenced primary

key?

A. DBA_TABLES only

B. DBA_CONSTRAINTS only

C. DBA_TABS_COLUMNS only

D. DBA_CONS_COLUMNS only

E. DBA_TABLES and DBA_CONSTRAINTS

F. DBA_TABLES and DBA_CONS_COLUMNS

Answer: B

The DBA_CONSTRAINTS view is required to return the name of the foreign key constraint and the referenced

primary key. The

CONSTRAINT_NAME column provides the name of the foreign key constraint. And the R_

CONSTRAINT_NAME shows the

referenced primary key.

Incorrect Answers

A: The DBA_TABLES dictionary view is used to provide only information about tables, not constraints.

C: The DBA_TABS_COLUMNS dictionary view shows information only about columns in the tables.

D: This view lists detailed information about every column associated with a constraint, but it does not return

the name of the referenced primary key.

E: You don't need to use DBA_TABLES dictionary view to retrieve this information.

F: You don't need to use DBA_TABLES and DBA_CONS_COLUMNS data dictionary views to retrieve this

information.

---------------

QUESTION 146

Your company hired Joe, a DBA who will be working from home. Joe needs to have the ability to start the

database remotely.

You created a password file for your database and set REMOTE_LOGIN_PASSWORDFILE = EXCLUSIVE

in the parameter file.

Which command adds Joe to the password file, allowing him remote DBA access?

A. GRANT DBA TO JOE;

B. GRANT SYSDBA TO JOE;

C.GRANT RESOURCE TO JOE;

D. orapwd file=orapwdPROD user=JOE password=DBA

Answer: B

The GRANT SYSDBA TO JOE command will add Joe to the password file, allowing him remote DBA access

when the

REMOTE_LOGIN_PASSWORDFILE is set to EXCLUSIVE in the parameter file.

Incorrect Answers

A: With this command Joe will be granted DBA role, but the password file needs to be edited to allow remote

access for the user to the database.

C: This command just grants RESOURCE role to the user. It will not provide remote access for the user.

D: Joe can be added to the password file using ORAPWD utility to open the remote access for the user if

REMOTE_LOGIN_PASSWORDFILE is set to SHARED.

OCA Oracle 9i Associate DBA Certification Exam Guide, Jason Couchman, p. 509-516

Chapter 10: Basics of the Oracle Database Architecture

----------------

QUESTION 148

Temporary tablespaces should be locally managed and the uniform size should be a multiple of the ________.

1Z0-031

A. DB_BLOCK_SIZE

B. DB_CACHE_SIZE

C. SORT_AREA_SIZE

D. Operating system block size

Answer: C

It's requirement that the uniform size of the temporary tablespace should be a multiple of the

SORT_AREA_SIZE

initialization parameter. Because, by the definition of a disk sort, the data written to disk will equal

SORT_AREA_SIZE, your extents must be at least that large. Size your initial sort segment according to the

formula num X SORT_AREA_SIZE + DB_BLOCK_SIZE, where num is a small number of your choice used

as multiplier of SORT_AREA_SIZE.

Incorrect Answers

A: The uniform size should be a multiple of the SORT_AREA_SIZE, not the DB_BLOCK_SIZE.

B: The uniform size has nothing to do with DB_CACHE_SIZE. DB_CACHE_SIZE specifies the size of the

DEFAULT buffer pool for buffers with the primary block size (the block size defined by the

DB_BLOCK_SIZE parameter).

D: It is not related with operating system block size either.

--------------

QUESTION 158

You are trying to determine how many disk sorts are happening on the database right now. Which of the

following dictionary tables would you use to find that information?

A. V$SESSION

B. V$SYSSTAT

C. DBA_SEGMENTS

D. V$SORT_USAGE

Answer: D

The V$SORT_USAGE view shows the sessions that are using sort segments in your database. Although you

may want to join that data in V$SESSION to see the username corresponding with the session, this view by

itself gives no indication about current disk sorts. V$SESSTAT or DBA_SEGMENTS do not either,

eliminating those choices as well.

-----------------------------------

QUESTION 159

When determining the number of UNDO segments in a database, which of the following choices identifies a

factor to consider?

A. Size of typical transactions

B. Concurrent transactions

C. Size of rows in table most frequently changed

D. Number of anticipated disk sorts

Answer: B

The number of concurrent transactions is used in part to determine the number of UNDO segments your

database should have. Had the question asked for which choice played a role in determining the size of extents

or total UNDO segment size, then size of typical transactions or concurrent transactions would have been

correct. Because disk sorts have little impact on UNDO segments, under no circumstances should you have

chosen number of anticipated disk sorts.

Oracle 9i: Administrator's Guide

Oracle 9i: Concepts

-----------------------------

QUESTION 190

You are defining areas on your Oracle database. Which of the following profile areas can be used to control the

resource usage for the other four?

A. LOGICAL_READS_PER_SESSION

B. CONNECT_TIME

C. COMPOSITE_LIMIT

D. CPU_PER_SESSION

E. PRIVATE_SGA

Answer: C

In this question, you must read the choices carefully. And understand what is being asked. The real question here is whether you understand resource costs and composite limits. Each of the choices other than

COMPOSITE_LIMIT can be rolled up into COMPOSITE_LIMIT with the use of resource costing. Only the

resources available for profiles can be included as part of a composite limit.

------------------------

QUESTION 200

The rows inside three Oracle tables supporting a customer order entry system are frequently accessed together

by means of a table join. Because data is always being added to the tables, you leave a lot of extra space inside

each block to accommodate growth. Which of the following types of tables would be useful for storing the data

in this context?

A. Temporary tables

B. Index-organized tables

C. Cluster tables

D. Standard Oracle tables

Answer: D

Although cluster tables initially might seem like the right answer, notice that the question states that this table

experiences frequent data change activity - the bane of a cluster table's existence. Thus, you must use standard

tables, and this choice is correct. Nothing in the question indicates that you need the functionality offered by

temporary tables. Finally, nothing in the question indicates the need for an IOT.

Wednesday, July 26, 2006

The SQL* Loader Environment

SQL*Loader is capable of loading from multiple files in one session. When multiple input files are used, SQL*Loader will generate multiple bad files and discard files--one set for each input file.

The following three datatypes are used in this control file.

- CHAR

Tells SQL*Loader that a field is a text field.

- INTEGER EXTERNAL

Tells SQL*Loader that a field is an integer represented using the text digits "0" through "9".

- DECIMAL EXTERNAL

Tells SQL*Loader that a field is a decimal value represented using the text digits "0" through "9" and an optional decimal point (".").

In the control file,

The keyword FILLER identifies the eight fields that are not being loaded into the database. Their names do not matter, but the same naming convention has been followed as for all the rest of the fields.

TIP: FILLER fields are a new feature in Oracle8i. If you are using a release prior to the Oracle8i release, SQL*Loader will not recognize the FILLER keyword.

Failover Operations Invoving a Physical Standby Database

role_management.htm#1024092

During failover operations involving a physical standby database:

- In all cases, the original primary database is removed from the Data Guard configuration

- In most cases, other logical or physical standby databases not directly participating in the failover operation remain in the configuration and do not have to be shutdown or restarted.

- In some cases, it might be necessary to re-create all standby databases after configuring the new primary database

Failover steps

Step 1 Identify and resolve any archived redo log gaps

SQL> SELECT THREAD#, LOW_SEQUENCE#, HIGH_SEQUENCE# FROM V$ARCHIVE_GAP;THREAD# LOW_SEQUENCE# HIGH_SEQUENCE#

Step 2 Copy and other missing archived redo logs

register archived redo logs

SQL> alter database register physical logfile 'filespec1';

A partial archived redo log contains all of the primary database redo data received by the standby database when the primary database fails, but the archived redo log is not automatically registered in the standby database.

Step 3 Repeat steps 1 and 2.

The query executed in step 1 displays information for the highest gap only. After resolving that gap, you must repeat steps 1 and 2 until the query in step 1 returns no rows.

Step 4 Initiate the failover operation on the target physical standby database

If your target standby database was configured with standby redo logs and you have not manually registered any partial archived redo logs, issue the following statement:

SQL> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE FINISH;

Otherwise, you must issue the following statement:

SQL> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE FINISH

2> SKIP STANDBY LOGFILE;

Step 5 Convert the physical standby database to the primary role.

Once the SQL ALTER DATABASE RECOVER MANAGED STANDBY DATABASE...FINISH statement completes successfully, transition the physical standby database to the primary database role by issuing the following SQL statement:

SQL> ALTER DATABASE COMMIT TO SWITCHOVER TO PRIMARY;

Tuesday, July 25, 2006

Suggestions for standard file extensions in SQL, PL/SQL

---------------- -------------

Package specification .pks

Package body .pkb

Package sepc and body .pkg

Procdueure .pro (or .sp for stored procedure)

Function .fun (or .sf for stored function)

Create table script(s) .tab or .ddl

Synonym creation statements .syn

Index definition .ind

Constraint definitions .con

Test scripts .tst

Verifying the logical standby database

set ORACLE_SID=

sqlplus /nolog

SQL> connect /as sysdba

SQL> startup mount

SQL> alter database guard all

SQL> alter database open

SQL> alter database start logical standby apply;

Verify (http://download-east.oracle.com/docs/cd/B10501_01/server.920/a96653/create_ls.htm#1061182)

Step 1: Verify that the redo logs have been registered

connect to logical standby and qurey:

SQL> alter session set nls_date_format = 'DD-MON-YY HH24:MI:SS';

SQL> select sequence#, first_time, next_time, dict_begin, dict_end

2> from dba_logstdby_log order by sequence#;

SEQUENCE# FIRST_TIME NEXT_TIME DIC DIC

---------- ------------------ ------------------ --- ---

794 23-JUL-06 21:54:09 23-JUL-06 21:54:12 YES NO

795 23-JUL-06 21:54:12 23-JUL-06 21:54:14 NO NO

796 23-JUL-06 21:54:14 23-JUL-06 21:54:16 NO NO

797 23-JUL-06 21:54:16 23-JUL-06 21:54:18 NO NO

798 23-JUL-06 21:54:18 23-JUL-06 21:54:20 NO YES

799 23-JUL-06 21:54:20 23-JUL-06 21:56:59 NO NO

800 23-JUL-06 21:56:59 23-JUL-06 23:20:21 NO NO

801 23-JUL-06 23:20:21 23-JUL-06 23:27:10 NO NO

802 23-JUL-06 23:27:10 24-JUL-06 23:14:50 NO NO

803 24-JUL-06 23:14:50 24-JUL-06 23:21:04 NO NO

804 24-JUL-06 23:21:04 24-JUL-06 23:21:08 NO NO

SEQUENCE# FIRST_TIME NEXT_TIME DIC DIC

---------- ------------------ ------------------ --- ---

805 24-JUL-06 23:21:08 24-JUL-06 23:21:10 NO NO

Step 2 Archive some redo logs

Connect to the primary database and archive some redo logs

SQL> alter system archive log current;

SQL> alter system archive log current;

Step 3 Query the DBA_LOGSTDBY_LOG view again

SEQUENCE# FIRST_TIME NEXT_TIME DIC DIC

---------- ------------------ ------------------ --- ---

794 23-JUL-06 21:54:09 23-JUL-06 21:54:12 YES NO

795 23-JUL-06 21:54:12 23-JUL-06 21:54:14 NO NO

796 23-JUL-06 21:54:14 23-JUL-06 21:54:16 NO NO

797 23-JUL-06 21:54:16 23-JUL-06 21:54:18 NO NO

798 23-JUL-06 21:54:18 23-JUL-06 21:54:20 NO YES

799 23-JUL-06 21:54:20 23-JUL-06 21:56:59 NO NO

800 23-JUL-06 21:56:59 23-JUL-06 23:20:21 NO NO

801 23-JUL-06 23:20:21 23-JUL-06 23:27:10 NO NO

802 23-JUL-06 23:27:10 24-JUL-06 23:14:50 NO NO

803 24-JUL-06 23:14:50 24-JUL-06 23:21:04 NO NO

804 24-JUL-06 23:21:04 24-JUL-06 23:21:08 NO NO

SEQUENCE# FIRST_TIME NEXT_TIME DIC DIC

---------- ------------------ ------------------ --- ---

805 24-JUL-06 23:21:08 24-JUL-06 23:21:10 NO NO

806 24-JUL-06 23:21:10 25-JUL-06 14:15:00 NO NO

807 25-JUL-06 14:15:00 25-JUL-06 14:15:03 NO NO

Step 4 Verify that data from the redo logs is being applied correctly

On the logical standby

SQL> select name, value from v$logstdby_stats where name='coordinator state';

coordinator state

APPLYING

Step 5 View the V$LOGSTDBY view to see current SQL apply activity

SQL> column status format a50

SQL> column type format a12

SQL> select type, high_scn, status from v$logstdby;

Step 6 Check the overall progress of log apply services

SQL> select applied_scn, newest_scn from dba_logstdby_progress;

APPLIED_SCN NEWEST_SCN

----------- ----------

360712 360712

When the numbers in the

APPLIED_SCN and NEWEST_SCN columns are equal (as shown in the query example), it means that all of the available data in the redo log was applied. These values can be compared to the values in the FIRST_CHANGE# column in the DBA_LOGSTDBY_LOG view to see how much log information has to be applied and how much remains.

Saturday, July 22, 2006

Oracle 8 Backup Test

Q. 1 : Tracy is a dba for a Large Corporation. She performs a cold backup everynight and runs the database in a noarchive log mode. Which of the following recover operations can she perform

1. Recover to Point in Time when a media failure occurs

2. Recover to the Last backup when a media failure occurs

3. Use the IMP Utility to restore the database

4. None of the above

---------------------------

ans:2 : When the database is run in a noarchivelog mode, the recovery after a media failure can only be till the last cold backup.

Q. 2 : What command is used to recover the database

1. Alter Tablespace recover database

2. Alter Database Recover Database

3. Alter Database Recover

4. Create ControlFile Recover database

-------------

ans: 2

Q. 1 : What is a Backup ?

1. A Representative Copy of Data

2. It is a background process in oracle

3. It is the Standby Server that supports the Instance incase of Failure

4. None of the Above

-------

ans: 1

Q. 2 : You are the dba for a Bank . Your data is very volatile and your recover strategy requires you to recover from a media failure. What backup strategy will you use

1. Online Backup Frequently in a Archivelog mode

2. Perform cold backups every night and exports every hour

3. Backup after each transaction

4. None of the above

-------

ans: 1 : When the data is very volatile, a frequent online backup in an archivelog mode, will keep your recovery time to a minimum

Q. 3 : As a new dba, how can you test the Backup and Recovery strategy that is currently in place

1. Wait until the production system crashed and then try recovering it

2. Gather all documentation and Verify that it is correct

3. Gather Documentation and Test the strategy on a test database and verify Offsite Storage Procedures

4. Trash the old system and design a new strategy

----------------

ans: 3 : It is very important to understand the strategy that was put in place by the old dba before you want to make any changes to the backup strategy

Q. 4 : What is a DBA's primary objective when designing a strategy

1. Time of Backup

2. Tape Size and Software Cost

3. Minimizing Data Loss and Down Time due to failure

4. None of the above

-----------------

ans: 3 : Database Availability and Minimizing Downtime should be your primary goal

Q. 5 : What are the important considerations while designing a backup strategy

1. Business Requirements

2. Database / Data Availability

3. Volatile Nature of Data

4. All of the above

-----------

ans: 4

Q. 6 : What factor plays a primary role in Backup Strategy

1. Operating System

2. Tape

3. Critical Data Availability

4. Number of Employees

-------------------------

ans:3 : Data availability parameters should always be the primary factor in designing a backup and recovery strategy

Q. 8 : Which of the following are valid questions to ask when choosing a backup recovery strategy

1. Is the Data highly volatile or Static

2. Does Management understand the trade offs involved with system availability

3. How important is the data and should all transactions be guaranteed

4. All of the above

----

ans:4

Q. 10 : Which of the following depends on your Backup and Recovery Strategy

1. Database Performance

2. PL/SQL Package Performance

3. Determination of whether complete or incomplete recovery can be performed

4. None of the above

-------------------

ans: 3

Q. 11 : Why is it important to do a business requirement analysis, when you are creating a backup recovery strategy

1. Business Users have the money

2. To assess the criticality of the data and to confirm that all the resources are available from the business group

3. To resolve all the politics between Users and Developers

4. None of the above

---------

ans 2

Q. 12 : What would be your primary concern if you have very static data

1. Data Integrity

2. Database Size

3. Fault Tolerance

4. All of the above

------

ans: 3

DS: what does this mean?

BACKUP METHOD

Q. 3 : A tablespace was converted to READ-ONLY. What should you do next

1. Backup Control File and Redo Log Files

2. Backup Control File and Parameter File

3. Backup Controlfile Only

4. Backup Datafiles that make up the read only tablespace

--------------

ans: 4

Q. 4 : What command creates a script to recreate the Control File

1. Alter database backup trace to control file

2. Alter database backup create to trace

3. Alter database backup controlfile to trace

4. None of the above

--------

ans:3 : Alter database backup control file to trace produces a trace file in the DUMP_DEST. This file will contain all the commands required to recreate the control file and mount the database

Q. 5 : When an Online database backup is performed you are performing a database backup at this level

1. Table Level

2. Tablespace Level

3. Data File Level

4. Database Level

-----------------------

ans: 2 : In a hot backup scenario, you will put each tablespace in a backup mode and then backup the datafiles related to the tablespace

Q. 6 : What is the best shutdown option before a cold backup

1. FORCE

2. NORMAL

3. ABORT

4. Any of the Above

---------------------

ans:2 : A database has to be shutdown Normal or Immediate before a cold Backup. But Normal is the best way since it guarantees the consistency of the database.

Q. 7 : What is a Physical backup

1. Operating System Level backup of files without regard to logical content

2. Export of databases

3. Extract of DDL and then backup on to Tape

4. None of the above

--------------

ans: 1

Q. 8 : Online Backup Procedures are very powerful due to these reasons

1. Database remains open during backups

2. Full Point-in-time Recovery

3. All of the above

4. None of the above

-------------------

ans: 3

FAILURE SCENARY

Q. 1 : What are valid causes of User Process Failure

1. Abnormal Session Termination

2. Program Address Exception

3. Abnormal Disconnect

4. All of the above

-------------

ans: 4

Q. 2 : Tom executed a DDL statement in SQL PLUS. Oracle reported an Invalid Syntax Error. What Kind of Failure has tom experienced

1. Statement Failure

2. Instance Failure

3. Application Failure

4. Media Failure

-----------

ans:1 : A statement failure occurs when there is a logical failure due to a bad SQL statement.

Q. 5 : Which of the following are valid causes for Instance Failure

1. Power Outage

2. Hardware Problems

3. Background Process Failure

4. All of the above

-------------------------

ans: 4

Q. 6 : Tom's Database Connection was dropped due to a network Failure. What kind of Failure did tom experience now.

1. Instance Failure

2. Media Failure

3. TCP/IP Failure

4. Process Failure

----------

ans: 4 : A process failure is a failure in user process. This will not impact the system, but will not let the user continue with his work

Q. 7 : Lisa tried to enter null values in a non null column, what kind of failure would occur

1. User Process Failure

2. Instance Failure

3. Hardware Failure

4. Statement Failure

----

ans: 4

Q. 9 : What is Media Failure ?

1. The database cannot house any more audio files

2. The Hard Disk head crashed and cannot read or write to disk

3. Dba flushed the SGA

4. None of the above

------------------

ans:2 : An error can arise when trying to write or read a file that is required to operate the database. This is called disk failure because there is a physical problem reading or writing physical files on disk. A common example is a disk head crash, which causes the loss of all files on a disk drive. Different files may be affected by this type of disk failure, including the datafiles, the redo log files, and the control files. Also, because the database instance cannot continue to function properly, the data in the database buffers of the system global area cannot be permanently written to the datafiles.

Q. 11 : Zacks user process failed. What should the dba do to fix this

1. Reboot Client Machine

2. Reboot Server

3. Apply Redo Logs and then recover the database

4. None of the above

---------------------

ans: 4 : PMON will take care of the abnormally disconnected process

Q. 12 : What should you do as a DBA if the instance failed due to the failure in SMON Process

1. Reboot the Server

2. Lsnrctl start smon

3. svrmgrl startup smon

4. None of the above

--------------

ans: 4 : Restart the instance to restart SMON.

Q. 1 : What is archived log mode ?

1. Redo Logs are Not created in this mode

2. Redo Logs are Archived before they are

3. Redo Logs are overwritten before archiving them

4. None of the above

------------------------

ans: 2 : Oracle writes to the online redo log files in a cyclical fashion. After filling the first log, it writes to the second and so on. When running in ArchiveLog Mode, the ARCH process makes a copy of the log before overwriting them

Q. 3 : What happens when tablespaces are put in backup mode for hot backups

1. High Volume of REDO is generated

2. No updates to tables in that tablespace

3. All of the above

4. None of the above

----------------------

ans: 1 : When the tablespace is put in a backup mode for Hot Backups excessive redo is generated

Q. 1 : What option of Exporting allows quicker data extractions

1. Grants =y

2. Consistent =y

3. Direct = true

4. Direct=y

-----------------------

ans:4 : Direct=y option bypasses the SQL layer to allow quicker data extractions

Q. 3 : What are the 3 levels of Exports

1. GLOBAL, USER, TABLESPACE

2. FULL, USER, TABLE

3. FULL, TABLESPACE, TABLE

4. GLOBAL, LOCAL, TRANSACTIONAL

--------------------------------------------

ans: 2 : Export can be done at a Full, User and Table Level

Q. 7 : What can you do to reduce the burden on a rollback segment during the import of a large table

1. Assign a Large Rollback Segment

2. SET TRANSACTION USE ROLLBACK SEGMENT RBSLARGE

3. COMMIT=Y

4. None of the above

---------------------

ans: 3 : Commit=y will reduce the unnecessary burden on the rollback segments when importing large tables

Q. 8 : What is an Incremental Export

1. Export of the objects that have changed since last export

2. Export of rows that have changed since last export

3. Incremental Exports cannot be performed in Oracle

4. None of the above

------------

ans: 1

Q. 9 : An Incremental Export is a good strategy for

1. Applications with few large tables and less data changes

2. Applications where changes are scattered across small tables

3. All of the above

4. None of the above

-----------

ans: 2

Q. 14 : What sequence is followed during an import

1. Tables, Indexes, Triggers, Constraints

2. Tables, Triggers, Constraints, Indexes

3. Tables, Indexes, Sequences, Constraints, Triggers

4. None of the above

----------------------

ans: 1

Q. 15 : Which step of the query process is by passed by direct-path export

1. SQL Command Processing Layer

2. Space Management

3. Buffer Cache

4. All of the above

-------------

ans: 1

Q. 17 : Data Loss can occur if you use an Export / Import Strategy Because

1. Rollback Segment contents are Not written to the Export file

2. Archive Log Contents Cannot be Applied After an Import

3. Export may fail due to Referential Integrity constraints

4. None of the above

--------------------

ans: 2

Q. 23 : How can you create a file containing the scripts to create indexes from the export

1. Using the INDEXFILE Option

2. Using the INDEXES option

3. All of the above

4. None of the above

-----------

ans:1 : INDEXFILE option can be used during imports to create a file of Index and Table creation scripts.

-------------

Creating a Logical Standby Database (failed)

ref: http://download-east.oracle.com/docs/cd

/B10501_01/server.920/a96653/create_ls.htm#1070616

1. Identify the primary database datafile and logfile

SQL> select name from v$datafile

NAME

--------------------------------------------------------------------------------

/opt/app/oracle/oradata/ntbkdb1/system01.dbf

/opt/app/oracle/oradata/ntbkdb1/undotbs01.dbf

/opt/app/oracle/oradata/ntbkdb1/drsys01.dbf

/opt/app/oracle/oradata/ntbkdb1/example01.dbf

/opt/app/oracle/oradata/ntbkdb1/indx01.dbf

/opt/app/oracle/oradata/ntbkdb1/tools01.dbf

/opt/app/oracle/oradata/ntbkdb1/users01.dbf

/opt/app/oracle/oradata/ntbkdb1/xdb01.dbf

/opt/app/oracle/oradata/ntbkdb1/logmnrts.dbf

SQL> select group#, type, member from v$logfile;

GROUP# TYPE MEMBER

---------- ------- --------------------------------------------------------------------------------

1 ONLINE /opt/app/oracle/oradata/ntbkdb1/redo01.log

2 ONLINE /opt/app/oracle/oradata/ntbkdb1/redo02.log

3 ONLINE /opt/app/oracle/oradata/ntbkdb1/redo03.log

2. Make a Copy of the Primary Database

Step 1 Shutdown the primary database

Step 2 Copy the datafiles to a temporary place

% cp /opt/app/oracle/oradata/ntbkdb1/system01.dbf ~/ntbkdb1_stby/

...

% cp /opt/app/oracle/oradata/ntbkdb1/logmnrts.dbf ~/ntbkdb1_stby/

Step 3 Restart the primary database

Step 4 Create a backup copy of the control file for the standby database.

SQL> ALTER DATABASE BACKUP CONTROLFILE TO

2> '/home/oracle/ntbkdb1_stby/ntbkdb2.ctl';

note: here user ~ for /home/oracle is not valid

Step 5 Enable restricted session mode

SQL> alter system enable restricted session;

Step 6 Build the LogMiner Dictionary

SQL> execute dbms_logstdby.build;

Step 7 Disable restricted session mode

SQL> alter system disable restricted session;

Step 8 Identify the latest archived redo log

SQL> alter system archive log current;

SQL> SELECT NAME FROM V$ARCHIVED_LOG

2> WHERE (SEQUENCE#=(SELECT MAX(SEQUENCE#) FROM V$ARCHIVED_LOG

3> WHERE DICTIONARY_BEGIN = 'YES' AND STANDBY_DEST= 'NO'));

NAME

------------------------------------------------------------------------

/opt/app/oracle/oradata/ntbkdb1/arch/ntbkdb1_arch782.arc

3. Create a text version of initiliazaton parameter files

SQL> create pfile='/home/oracle/ntbkdb1_stby/initntbkdb2.ora'

from spfile;

4 Copy Files from the Primary Database Location to

the Standby Location

On the primary database, use an operating system copy utility to copy

the following binary files from the primary database

site to the standby site:

- Backup datafiles and control files created in 2.

- Latest archived redo log that was identified in step 8 of Section 2.

- Database initialization parameter file created in Section 3.

5. Set the initilization parameter file for the logic standby database

Caution:

Review the initialization parameter file for additional parameters that

may need to be modified. For example, you may need to modify the dump

destination parameters (background_dump_dest, core_dump_dest,

user_dump_dest) if the directory location on the standby database is

different from those specified on the primary database. In addition,

you may have to create some directories on the standby system if they

do not already exist.

6. create a window service ( not for unix system)

7. Configure listner for both primary and standby site8. Enable Dead Connectiong Detection on the Standby System

in sqlnet.ora on the standby system, set

sqlnet.expire_time=2

9. Create Oracle Net Service Names

10. Start and mount the logical standby databatase

SQL> startup mount pfile='/home/oracle/ntbkdb1_stby/initntbkdb2.ora'

ORACLE instance started.

Total System Global Area 68228236 bytes

Fixed Size 451724 bytes

Variable Size 50331648 bytes

Database Buffers 16777216 bytes

Redo Buffers 667648 bytes

ORA-01990: error opening password file

'/opt/app/oracle/product/9.2.0.4/dbs/orapw'

ORA-27037: unable to obtain file status

Linux Error: 2: No such file or directory

Additional information: 3

fix:

orapwd file=orapwntbkdb2 password =oracle entries=8

after the fix no ORA-01990 etc errors

-------------------------------------------------------------------

Jul 23, 2006 11:30 AM -- continue to create a logical standby database

repeat step 10 first

11. Rename Data Files on the Logical Standby Database

12. Rename online redo log on the logical standby database

copied the online redo log to the new locaiton first

13. Turn on the database guard

sql> alter database guard all;

sql> alter database open resetlogs;

SQL> alter database open resetlogs;

alter database open resetlogs

*

ERROR at line 1:

ORA-01152: file 1 was not restored from a sufficiently old backup

ORA-01110: data file 1: '/home/oracle/ntbkdb1_stby/system01.dbf'

!!!!!

This practise has problems. Do it again from begining!!

Intelligent Agent starting problem under Linux (FC3)- Applying Patch to fix

Problem: Trying to start intelligent agent, failed as shown below:

------------------------------------------------------------

[oracle@YS02 bin]$ agentctl start agent

DBSNMP for Linux: Version 9.2.0.4.0 - Production on 22-JUL-2006 14:20:19

Copyright (c) 2003 Oracle Corporation. All rights reserved.

Starting Oracle Intelligent Agent.../opt/app/oracle/product/9.2.0.4/bin/dbsnmpwd : line 156: 3891 Segmentation fault nohup $ORACLE_HOME/bin/dbsnmp $* >>$DB SNMP_WDLOGFILE 2>&1

/opt/app/oracle/product/9.2.0.4/bin/dbsnmpwd: line 156: 3904 Segmentation fault nohup $ORACLE_HOME/bin/dbsnmp $* >>$DBSNMP_WDLOGFILE 2>&1

/opt/app/oracle/product/9.2.0.4/bin/dbsnmpwd: line 156: 3916 Segmentation fault nohup $ORACLE_HOME/bin/dbsnmp $* >>$DBSNMP_WDLOGFILE 2>&1

/opt/app/oracle/product/9.2.0.4/bin/dbsnmpwd: line 156: 3928 Segmentation fault nohup $ORACLE_HOME/bin/dbsnmp $* >>$DBSNMP_WDLOGFILE 2>&1

-----------------------------------------------------------------------------------------------

Solution: Applying Patch

Jul 23, 2006 11:00 AM -- fix the intelligent agent starting problem

(1) Install OPatch user ORACLE_HOME

(2) Applying patch p3238244

% unzip

% cd 3238244

% $ORACLE_HOME/OPatch/opatch apply

(3) To find which makefile handles the linking of dbsnmp:

% find $ORACLE_HOME -name "*.mk" xargs grep -l dbsnmp

/opt/app/oracle/product/9.2.0.4/network/lib/ins_oemagent.mk

/opt/app/oracle/product/9.2.0.4/network/lib/env_oemagent.mk

(4) relink

$ su - oracle

$ cd $ORACLE_HOME/network/lib

$ make -f ins_oemagent.mk install

(5) start the agent successfully

[oracle@YS02 lib]$ agentctl start agent

DBSNMP for Linux: Version 9.2.0.4.0 - Production on 23-JUL-2006 11:13:24

Copyright (c) 2003 Oracle Corporation. All rights reserved.

Starting Oracle Intelligent Agent....

Agent started

Friday, July 21, 2006

Oracle Data Guard - Role Management

switchover

- a reversible role transition between the primary database and one of its standby databases

failover

- tansitions a standby database to the primary role in response to a failure of the primary database

During any role transition, the amount of downtime required to complete the operation, the potential for data loss, and the effects on other standby databases in the configuration are determined by:

- The state of the primary database just before the transition

- The state of the standby database selected for the role transition at the time of the transition

- If the selected standby database was configured as a physical standby database or a logical standby database

- If the role transition is a switchover or a failover

Switch over operation

- used to reduce primary database downtime during planned outages, such as OS or hardware upgrades- takes place in two phases:-- (1) an existing primary database is transitioned to a standby role

-- (2) a standby database is transitioned to the primary role

Note:

Because the primary and standby database releases must be the same at all times, do not use a switchover operation to perform a rolling upgrade of Oracle database software. However, it might be possible to use a switchover operation to perform a rolling upgrade of

system hardware.

Jul 21, 06 2:33 PM

Practise: perform a switchover to physical standbyOn the current primary database

Step 1 Verify that it is possible to perform a switchover operation

SQL> select switchover_status from v$database;

expected to see: TO STANDBY

In my case showed: SESSION ACTIVE

maybe it's due to I haven't set up the initailization parameters for role transition.

Now following 5.8.2 to set up initialization parameters for swithover:

(1) create init.ora files on primary site, directly edit the parameters there

specify the following paramters in the initoemrep.ora file

Example 5-7 Primary Database: Standby Role Initialization Parameters

FAL_SERVER=oemrep2

FAL_CLIENT=oemrep

DB_FILE_NAME_CONVERT=('/standby','/primary')

LOG_FILE_NAME_CONVERT=('/standby','/primary')

STANDBY_ARCHIVE_DEST=/disk1/oracle/oradata/payroll/

STANDBY_FILE_MANAGEMENT=AUTO

Notes: it seems that *_covert paramters can not be set through 'alter system set ...' command

(2) on the standby site:

SQL> alter system set log_archive_dest_2='SERVICE=oemrep';

SQL> alter system set log_archive_dest_state_2=enable ( Note: already enabled)

SQL> alter system set fal_server=oemrep

SQL> alter system set fal_client=oemrep2

(3) shutdown the primary db and startup using pfile.

verifying all the parameters are set for switch over

create spfile from pfile

(4)

On the original primary database (the new standby) set this parameter to allow the receipt of the redo from the new primary database. For example:

SQL> ALTER SYSTEM SET REMOTE_ARCHIVE_ENABLE=RECEIVE SCOPE=MEMORY;

On the new primary database (the former standby) set this initialization parameter to allow the sending of redo to the standby database.SQL> ALTER SYSTEM SET REMOTE_ARCHIVE_ENABLE=SEND SCOPE=MEMORY;

Now verifying again on primary db

SQL> select switchover_status from v$database;

still get session_active

close the session on standby db

DOUBLE CHECK INITILIZATON PARAMETERS:

Primary Database: Primary Role Initialization Parameter

log_archive_dest_1 = 'LOCATION=e:\oracle\oradata\oemrep mandatory'

log_archive_dest_2 = 'SERVICE=oemrep2'

log_archive_dest_state_1=ENABLE

log_archive_dest_state_2=ENABLE

log_archive_format=oemrep_arch%s.arc

remote_archive_enable=true

Primary Database: Standby Role Initialization Parameter

fal_server=OEMREP2

fal_client=OEMREP

db_file_name_convert=('e:\oracle\oradata\standby\oemrep2\','e:\oracle\oradata\oemrep\')

log_file_name_convert=('e:\oracle\oradata\standby\oemrep2\','e:\oracle\oradata\oemrep\')

standby_archive_dest= e:\oracle\oradata\oemrep

standby_file_management=AUTO

(FAL: fetch archive log)

Standby Database: Standby Role Initilization Parameters

fal_server=OEMREP

fal_client=OEMREP2

db_file_name_convert=('e:\oracle\oradata\oemrep\', 'e:\oracle\oradata\standby\oemrep2\')

log_file_name_convert=('e:\oracle\oradata\oemrep\', 'e:\oracle\oradata\standby\oemrep2\')

standby_archive_dest=e:\oracle\oradata\standby\oemrep2

standby_file_management=AUTO

log_archive_dest_1='LOCATION=e:\oracle\oradata\standby\oemrep2'

log_archive_dest_state_1=enable

log_archive_format=oemrep_arch%s.arc

remote_archive_enable=TRUE

Standby Database: Primary Role Initialization Parameters

log_archive_dest_2='SERVICE=oemrep'

log_archive_dest_state_2=enable

11:48am continue - perform switchover

Enabling Initialization Parameters During Role Transition

On the original primary database (the new standby)

For example:

SQL> ALTER SYSTEM SET REMOTE_ARCHIVE_ENABLE=RECEIVE SCOPE=MEMORY;

On the new primary database (the former standby)

SQL> ALTER SYSTEM SET REMOTE_ARCHIVE_ENABLE=SEND SCOPE=MEMORY;

(in my case I set the 'TRUE', so no need to perform this step)

=> do Step 1 again: Verify that it is possible to perform a switchover operation.

SQL> select switchover_status from v$database

still got session active; igore this continue

Step 2 Initiate the switchover operation on the primary database.

on the primary database:

SQL> ALTER DATABASE COMMIT TO SWITCHOVER TO PHYSICAL STANDBY;

instead, I use:

SQL> alter database commit to switchover to standby with session shutdown;

Step 3 Shut down and restart the former primary instance.

Shut down the former primary instance and restart it without mounting the database:

SQL> SHUTDOWN IMMEDIATE;

SQL> STARTUP NOMOUNT;

Mount the database as a physical standby database:

SQL> ALTER DATABASE MOUNT STANDBY DATABASE;

At this point in the switchover process, both databases are configured as standby databases

On the target physical standby database

Step 4 Verify the switchover status in the V$DATABASE view.

After you transition the primary database to the physical standby role and the switchover notification is received by the standby databases in the configuration, you should verify if the switchover notification was processed by the target standby database by querying the SWITCHOVER_STATUS column of the V$DATABASE fixed view on the target standby database.

For example:

SQL> SELECT SWITCHOVER_STATUS FROM V$DATABASE;

SWITCHOVER_STATUS

-----------------

SWITCHOVER PENDING

1 row selected

DS. got 'session active' ignore continue

Step 5 Switch the physical standby database role to the primary role.

SQL>alter database commit to swithover to primary with session shutdown;

Step 6 Shut down and restart the new primary database.

Shut down the target standby instance and restart it using the appropriate initialization parameters for the primary role:

SQL> SHUTDOWN;

SQL> STARTUP;

Step 7 Start managed recovery operations and log apply services.

Issue the following statement to begin managed recovery operations on the new physical standby database:

SQL> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE DISCONNECT FROM SESSION;

On the new primary database

Step 8 Begin sending redo data to the standby databases.

Issue the following statement on the new primary database:

SQL> ALTER SYSTEM ARCHIVE LOG CURRENT;

---- practise of switchover to a physcical standby ended ---

Note: SQL> alter system set remote_archive_enable=send;

alter system set remote_archive_enable=send

*ERROR at line 1: ORA-02095: specified initialization parameter cannot be modified

DS: this is due to the remote_archive_enable is a static parameter

Managing a Physical Standby Database

8.1.1 Starting up a Physical Standby Database

1. Start the database:

SQL> STARTUP NOMOUNT;

2. Mount the standby database:

SQL> ALTER DATABASE MOUNT STANDBY DATABASE;

3. Start the managed recovery operation:

SQL> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE

2> DISCONNECT FROM SESSION;

Note: here has the option to open the dabase for read-only access

8.1.2. Shutting down a physical standby database

The following steps show you how to shut down a standby database:

1. Find out if the standby database is performing managed recovery. If the MRP0 or MRP process exists, then the standby database is performing managed recovery.

SQL> SELECT PROCESS, STATUS FROM V$MANAGED_STANDBY;

2. Cancel managed recovery operations.

SQL> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL;

3. Shut down the standby database.

SQL> SHUTDOWN IMMEDIATE;

8.2.2 Opening a Standby Database for Read-Only Access

1) To open a standby database for read-only access when it is currently shut down:

SQL> STARTUP NOMOUNT;

SQL> ALTER DATABASE MOUNT STANDBY DATABASE;

SQL> ALTER DATABASE OPEN READ ONLY;

2) To open a standby database for read-only access when it is currently performing managed recovery:

SQL> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL;

SQL> ALTER DATABASE OPEN READ ONLY;

3) To change the standby database from being open for read-only access to performing managed recovery:

1. Terminate all active user sessions on the standby database.

2. Restart log apply services:

SQL> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE

2> DISCONNECT FROM SESSION;

RMAN backup and recovery of a primary database using a standby database

- use the physical standby database to off-load the database backup operation from the primary database

- Using RMAN at the standby site, you can back up the datafiles and the archived redo logs while the standby database is performing managed recovery.

see Oracle9i Recovery Manager User's Guide

8.4 Managing Primary Database Events That Affect the Standby Database (stop here)

Wednesday, July 19, 2006

Log Apply Service

??> What this means: Archived redo data is not available for log apply services until a log switch occurs on the primary database.

DS: suppose the current archived redo sequence is 75, the last applied archived redo seq# maybe 74 (not 75), only next time the log swtich occurs on the primary db, the 75 will be available for being applied

Task List: Configuring Log Apply Services for Physcial Standy Databases

1. Start the standby instance and mount the standby database.

SQL> startup nomount;

SQL> alter database mount standby database;

2. Enable managed recovery or read-only operations

- to start a foreground session:

SQL> alter database recover managed standby database;

- to start a background process:

SQL> alter database recover managed standby database disconnect;

- stop log apply services

SQL> alter database recover managed standby database cancel;

3. If performing managed recovery operations, set initilization parameters to automatically resolve archive gaps.

4. Monitor log apply services.

(1) verify log apply services are initiated correctly

SQL> select process, status, thread#, sequence#, block#, blocks

2> from v$managed_standby;

(2) v$archive_dest_status

An archive gap is a range of archived redo logs created whenever the standby system is unable to receive the next archived redo log generated by the primary database

e.g network becomes unavailable

Every minute, the primary database polls its standby databases to see if there is a gap in the sequence of archived redo logs. The polling between the primary and standby databases is sometimes referred to as a heartbeat. The primary database polls the standby databases serially.

To determine if there is an archive gap on the physical standby database:

SQL> select * from v$archive_gap;

6.5 Monitoring Log Apply Services for Physical Standby Databases

7/20/06 10:37 AM practice:

1. accessing the v$managed_standby fixed view to monitor log apply and log transport activity at standby site

3 Accessing the V$ARCHIVED_LOG Fixed View

to show all the archived redo logs received from the primary database.

SQL> SELECT REGISTRAR, CREATOR, THREAD#, SEQUENCE#, FIRST_CHANGE#,

2> NEXT_CHANGE# FROM V$ARCHIVED_LOG;

??> under creator column, I see 'ARCH' and ' FGRD', what the FGRD stands for

Tuesday, July 18, 2006

Creating a Physical Standby Database - Log transport services

Ref: http://download-west.oracle.com/docs/cd/

Checklist

3.2.1 Identify the Primary Database Datafiles (Primary)

3.2.2 Make a Copy of the Primary Database (Primary)

3.2.3 Create a Control File for the Standby Database (Primary)

3.2.4 Prepare the Initialization Parameter File to be Copied to the Standby Database (Primary)

3.2.5 Copy Files from the Primary System to the Standby System (Primary)

3.2.6 Set Initialization Parameters on a Physical Standby Database (Standby)

3.2.7 Create a Windows Service (Standby)

3.2.8 Configure Listeners for the Primary and Standby Databases (Primary and Standby)

3.2.9 Enable Dead Connection Detection on the Standby System (Standby)

3.2.10 Create Oracle Net Service Names (Primary and Standby)

3.2.11 Create a Server Parameter File for the Standby Database (Standby)

3.2.12 Start the Physical Standby Database (Standby)

3.2.13 Initiate Log Apply Services (Standby)

3.2.14 Enable Archiving to the Physical Standby Database (Primary)

7/19/06 11:57 AM Practice

- While primary database is runing, to start the standby database and verify everything is working, do the following:

(1) Start the Physical Standby Database (in the same system as the primary)

c:> set ORACLE_SID=oemrep2

c:> sqlplus /nolog

SQL> connect /as sysdba

SQL> startup nomount;

(DS: if just type 'startup;', will see 'ORA-01666: controlfile is for a standby database'.

And the database is not mounted)

SQL> alter database mount standby database;

(2) To test: on the primary db, under scott, create a tab mytab1 and insert some rows

SQL> select * from mytab1;

A B

---------- ----------

1 1

2 2

3 3

(3) initiate the Log Apply services

SQL> alter database recover managed standby database disconnect from session

(4) On the primary db, verifying

LOG_ARCHIVE_DEST_2=oemrep2

LOG_ARCHIVE_DEST_STATE_2=ENABLE

(5) start remote archiving (not remote in this case) on the primary db

ALTER system archive log current

(6) Verifying the physcal standby database

step 1 Identify the existing archived redo logs

SQL> select sequence#, first_time, next_time

2 > from v$archived_log order by sequence#;

step 2 Archiving the current log

SQL> alter system archive log current

step 3 Verify that the new archived redo log was received

SQL> select sequence#, first_time, next_time

2 from v$archived_log order by sequence#;

step 4 Verify that the new archived redo log was applied

SQL> select sequence#, applied from v$archived_log order by sequence#;

(??> Notes: there can be duplicate entries with one shows 'NO', the other shows 'Yes' under 'applied' column; note the creator column is different)

SQL> select sequence#, applied, creator from v$archived_log where sequence#=73;

SEQUENCE# APP CREATOR

---------- --- -------

73 NO FGRD

73 YES ARCH

(note: when trying to shutdown standby db recieve:ORA-16175: cannot shut down database when media recovery is active

--------- Practicse Ends Here --------------------------------------

Log transport services

Log apply services

role management services

Log transport services, related concepts:

- Redo logs

- Redo log destinations

- Transmission and reception of redo log

- Data protection

A Data Guard configuration in one of three data protection mode:

- Maximum protection

- Maximum availability

- Maximum performance

Size of online redo logs

- most important factor: the amount of appliaction data that needs to be applied to a standby database during a database failover operation

REMOTE_ARCHIVE_ENABLE initialization parameter

- permission for archiving of online redo logs to remote destination

- TRUE, FALSE, SEND, RECEIVE

CONTROL_FILE_RECORD_KEEP_TIME initialization parameter

- minimum number of days that must pass before a reusable record in the conntrol file can be resued

5.3.3 Standby Redo Logs

- similar to online redo logs

- required for physical standby databases running in maximum protection mode and maximum availability mode

Remote file server process

The following parameters limit the number of standby redo log groups that you can add to a database:

- MAXLOGFILES clause of the CREATE DATABASE statement for the primary database determines the maximum number of groups of standby redo logs per physical standby database. The only way to override this limit is to re-create the primary database or control file.

- LOG_FILES parameter can temporarily decrease the maximum number of groups of standby redo logs for the duration of the current instance.

- MAXLOGMEMBERS clause of the CREATE DATABASE statement used for the primary database determines the maximum number of members per group. The only way to override this limit is to re-create the primary database or control file.

OraFAQ Forum Reading Notes - Data Guard

( http://www.orafaq.com/forum/f/12/0/ )

Re: How to disable transfers of LOGS to standby database

Try:

SQL> ALTER SYSTEM SET log_archive_dest_state_2 = DEFER;

System altered.

DEFER = Specifies that valid destination information and attributes are preserved, but the destination is excluded from archiving operations until re-enabled

SUBJECT: log_sequence column in v$archive_dest

In my dataguard setup, in which 2 primary RAC instances are sending archive logs to a Physical standby database, the log_Sequence value in V$ARCHIVE_DEST view is not getting updated.

1 select ads.dest_id,max(sequence#) "Current Sequence",

2 max(log_sequence) "Last Archived"

3 from v$archived_log al, v$archive_dest ad, v$archive_dest_status ads

4 where ad.dest_id=al.dest_id

5 and al.dest_id=ads.dest_id

6* group by ads.dest_id

DEST_ID Current Sequence Last Archived

---------- ---------------- -------------

1 38743 38743 2 38743 0 3 38726 0

There is no problem with the dataguard, and it applies the archive logs correctly and is in sync with PROD.

Our dataguard monitoring script checks the archive log gap based on the values in this column,and METALINK also says the same thing.

From Metalink: (Note: 241374.1)Quote:

-- The following query will determine the current sequence number

-- and the last sequence archived.

If remotely archiving using the-- ARCH process then the archived sequence should be equal to the-- current sequence. The applied sequence information is updated at-- log switch time.

select ads.dest_id,max(sequence#) "Current Sequence",max(log_sequence) "Last Archived"from v$archived_log al, v$archive_dest ad, v$archive_dest_status ads where ad.dest_id=al.dest_id and al.dest_id=ads.dest_id group by ads.dest_id;

Would you please throw some light, as why this is not getting updated.

// -----------------------------------------------------------------------

Q: we are planning a switchover making the standby as the primary.We will not be making the old primary db to standby. Application testers want old primary back as primary and test the application with both old primary and new primary.In this case, How can I make the old primary back to a normal PROD database?? After running, "Alter database commit to switchover to physical standby", it will create the standby control file, right?? So is the control file overwritten in this case?? Do I need to recreate the control file ??

A:Try to do a fail-over instead of a switch-over. After a fail-over you should have 2 primary databases with no data guard.

( messeage read till 16-Jun-06)

--------------------------

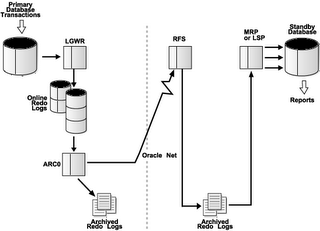

SUBJECT: 2x standby db - 1 using redo apply & 1 using sql apply

HiWe are wanting to setup 2 standby databases - 1 for DR/failover using redo apply and 1 for reporting using SQL apply.The first standby db is strictly for failover as the Primary db is a 24x7 system. The second db will allow us to add Summary tables & Materialized views around the core data and remove all reports processing away from the Primary.Oracle documentation says you can have up to 9 standby databases, but doesn't say they can be populated by the 2 different methods (redo & SQL apply). I'm assuming we can, but would like to know if anyone else has successfully setup a similar scenario before investing large amounts of time.thanks

Ans:

As a short answer - yes.We have successfully tested setting up both a physical and logical standby on the same primary - basically for the same reasons you are looking to do it. We are working toward rolling this out in our production environment soon.Our environment is a 10GR1 4 node RAC cluster with physical replication to a single node DR site and soon logical standby to a single node reporting environment.

File organization

book: Database management systems

heap file

- record in a ~ are stored in random order across the pages of the file.

record id (rid)

- Each record in a file has a unique identifier called a record id, or rid for short. An rid has the property that we can identify the disk address of the page containing the record by using the rid.

Page 276

There are three main alternatives for what to store as a data enery in an index

(1) A data entry k* is an actual data record( with search key value k)

(2) A data entry is a

(3) A data entry is a

Clustered Indexes:

- When a file is organized so that the ordering of data records is the same as or close to the ordering of data entries in some index, the index is said to be clustered; otherwise, it is an unclustered index.

8.4 COMPARISON OF FILE ORGANIZATIONS

Assume

- files and indexes are organized according to the composite search key

- All selection operations are specified on these fields

Organizations:

- File of randomly ordered employee records, or heap file

- File of employee records sorted on

- Clustered B+ tree file with search key

- Heap file with an unclustered B+ tree index on

- Heap file with an unclustered hash index on

Cost model:

- 'B' denotes the number of data pages for a table

- 'R' the number of records per page-

Dynamic Performance Tables

- a set o f virtual tables

- SYS owns ows the ~; their names all begin with V_$

- Views are created on these tables

- Public synonyms are created for the views; the synonym names begin with V$

Notes:

The actual dynamic performance views are identified by the prefix V_$. Public synonyms for these views have the prefix V$. Database administrators and other users should access only the V$ objects, not the V_$ objects.

Note:

SYSTEM can access V$ view; however, in the triggers that owned by SYSTEM, access V$view is not allowed. privilledge obtained through roles

Notes: The problem is that you have access to v$session through a role and therefore cannot create any objects such as triggers, views or procedures which access it. Try logging on as SYS and giving a direct grant select to the user.

9i database reference

http://download-east.oracle.com/docs/cd/B10501_01/server.920/a96536/ch3.htm#1109139

Saturday, July 15, 2006

Oracle Processes

- User processes

- Oracle proceses

Oracle Processes

- Server Proceses

-- Created to handle requests from connected user processes

-- Configuration: dedicated server, shared server

- Background Proceses

-- DBWn, LGWR, CKPT, SMON, PMON, ARCn, RECO, Jnnn, Dnnn, LMS, QMNn

Database Writer (DBWn)

- writes modified blocks from the database buffer cache to the datafile

- Can have many(20 ?) DBWR process: DBW0 to DBW9, DBWa to DBWj

- Writing occurs for two main reasons

-- need to read more blocks reqired by users whereas there is no free space in the buffer cache

-- checkpointing

Log Writer(LGWR)

- Writes redo information from the log buffer in the SGA to all copies of the current redo log file on disk

Checkpoint(CKPT)

- At specific times, all modified database buffer in the SGA are written to the datafiles by DBWn. This event is called a checkpoint

- The checkpoint process is responsible for

-- signaling DBWn at checkpoints

-- updating all the datafiles and control files ( in their headers) of the database to indicate the most recent checkpoint.

notes: distinguish checkpoint event or operation from checkpoint process

System Monitor(SMON)

- Performs recovery when a failed instance starts up again

- With RAC, the SMON process of one instance can perform instance recovery for other instances that have failed

- Clean up temporary segments

- Recovers terminated transactions skipped during recovery because of file-read or offline errors (These transactions are eventually recovered by SMON when the tablespace or file is brought back online.

- Coalesces free extents in the dictionary managed tablespace

Process Monitor (PMON)

- Peforms process recovery when a user process fails

- Cleaning up the cache and freeing resources ( releasing any locks)

- Checks on dispatcher and server processes and restarts them if they have failed

Archiver(ARCn)

- Copies the online redo log files to archival storage after a log switch has occured

- Can specify up to 10 ARCn processes

- Active only a database is in ARCHIVELOG mode and automatic archiving is enabled

Recoverer(RECO)

- Used to resolve distributed transactions that are pending due to a network or system failure in a distributed database

Job Queue Processes(Jnnn)

- Used for batch processing

Dispatcher(Dnnn)

- In a shared server configuration, routing requests from connected user processes to available shared server processes and returning the responses back to the appropriate user processes.

Lock Manager Server(LMS)

- used for inter-instance locking in RAC

Queue Monitor(QMNn)

- (Optional) monitor the message queues for Oracle Advanced Queuing.

- Configure up to 10 queue monitor

Friday, July 14, 2006

Peformance Tuning Basic Concepts

bad performance: recognized easily

good performace: absence of bad performance

Three basic steps:

- Define performance problems

- Oracle Server software

- Server machine

Beyond Oracel server software and machive level, performance problem may lie in the design of

application or the database itself

Oracle Server Performance

Looking bottlenecks in Oracle server software itself

- OEM; lower than optimal use of internal resource

- own scripts: monitoring or auditing

- session waiting unnecessarily

- dynamic perform views: V$SYSTEM_EVENT; V$SESSION_WAIT;

pinpoit; foucusing on ; the largest source of contension

- source of problem: e.g.:

* lower-than-expected database buffer cache hit ratio

- simply increase the initialization parameter DB_BLOCK_BUFFERS

* long time to fetch database rows from the disk: calls for more investigation into the usage of the overall resource, more difficult to locate the specific problem

Machine Resource Usage

CPU

Memory

DISK I/O

Network bandwidth

E.g.

run out of physical memory; swap areas of memory out to the disk; cause I/O bottleneck

- identify machine resource usage

UNIX: sar, iostat, vmstat

Wednesday, July 05, 2006

Setting and Configuring the Workload Generator

ref: http://www.oracle.com/technology/obe/obe9ir2/obe-mng/wkldgen/wkldgen.htm

Loading the Data

-------------------

1. Download wkldgen.zip and unzip it into your working directory (e:\training-OracleDBA\oraexe\wkldgen).

2. Edit the following bat file:

OE_large_cre_popul.bat

OE_large_demo.bat

OE_large_drop.bat

Make sure that the working directory is set to your physicaly working directory path.

(YS: will directly execute sql not bat )

3. Open a DOS Command Prompt, go to your working directory, and

execute the OE_large_cre_popul.bat file.

This .bat file executes the SQL code to create two new tables in the OE schema: ORDERS_L and ORDER_ITEMS_L

(done successfully)

Setting Up the Workload Generator

--------------------------------

1. Open SQL*Plus and run the following script to create the Workload Generator PL/SQL package and package body:

@build_workload_generator

(done successfully)

2/3/4 Right-click on your desktop, select New..., then select Shortcut to create a shortcut to the Workload Generator application.

5. Right-click on My Computer from your desktop and select Properties. Click the Environment tab. Add a user variable called ORACLE_SID and set it to OEMREP (this is the database I intend to test, also is the OMS repository database, on my notebook), then click Set and then OK.

Testing the Workload Generator

--------------------------------

Now that you have set up the Workload Generator, you should test it to make sure that it is running appropriately. To do so, perform the following steps:

1. Open the Workload Generator application from your desktop. Click OK to accept the defaults.

2. Click the Options tab.

3. In the Order Entry box, enter 1 for Number of Users, and oe_work for the Script. In the Shipping box, enter 1 for the Number of Users, and sh_work for the Script and click Save Options. Then select the Statistics tab.

4. Click Start to begin running the procedure to generate a workload. You will see that some workload is being generated.

5. To stop the Workload Generator, click the Reset button and then click Yes.

(YS: only the default option (1 OE user 1 SH usr) is OK)

Tuesday, July 04, 2006

Using EXPLAIN PLAN, SQL Trace and TKPROF

- access the efficiency of the SQL statements

- use these tools with EXPLAIN PLAN rather using EXPLAIN PLAN alone

TKPROF is be albe to

- format the contents of the trace file and place the output into a readable output file

- determine the execuaton plans of SQL statements

- create a SQL script that stores the statistics in the database

STEPS:

1. set initialization parameters

TIMED_STATISTICS

MAX_DUMP_FILE_SIZE

USER_DUMP_DEST

2. Enabling the SQL trace facility

- DBMS_SESSTION.SET_SQL_TRACE

- ALTER SESSION SET SQL_TRACE = TRUE;

3. Formatting Trace Files with TKPROF

TKPROF filename1 filename2 [ SORT = { option (option [option]...)} ]

[ PRINT = integer ] [ AGGREGATE = { YES NO } ]

[ INSERT = filename3 ]

[ SYS = { YES NO } ]

[ [ TABLE = schema.table ] EXPLAIN = user/password ]

[ RECORD = filename ]

-------------------

Using EXPLAIN PLAN

--------------------

- The EXPLAIN PLAN statement displays exercution plans chosen by the Oracle optimizer for SELECT, UPDATE, INSERT and DELETE statements.

- before issuing an EXPLAIN PLAN statement, you must have a table to hold its output

- use UTLXPLAN.SQL to create the PLAN_TABLE

SQL> @e:\oracle\ora92\rdbms\admin\utlxplan.sql

- Displaying PLAN TABLE output: (most recent plan table )

UTLXPLS.SQL - Shows plan table output for serial processing

UTLXPLP.SQL - Shows plan table output with parallel execution columns

Monday, July 03, 2006

Interview Question - SQL/SQL plus

1. How can variable be passed to a SQL routine?

ans: by use of & symbol(ampersands)

- e.g. "select * from dba_tables where owner=&owner_name"

- double ampersands tells SQLPLUS to resubstitute the value for each subsequent use of the variable

2. How to include a carriage return /linefeed in your output from a SQL script?

ans: using CHR(10) e.g. select 'hello' chr(10) 'world!' from user_tables;k

3. How can you call a PL/SQL procedure from SQL?

Level: Intermediate

Expected answer: By use of the EXECUTE (short form EXEC) command.

4. How do you execute a host operating system command from within SQL?

Level: Low

Expected answer: By use of the exclamation point "!" (in UNIX and some other OS) or the HOST (HO) command.

5. You want to use SQL to build SQL, what is this called and give an example

Level: Intermediate to high

Expected answer: This is called dynamic SQL. An example would be:

set lines 90 pages 0 termout off feedback off verify off

spool drop_all.sql

select ?drop user ?username? cascade;? from dba_users

where username not in ("SYS?,?SYSTEM?);

spool off

Essentially you are looking to see that they know to include a command (in this case DROP USER...CASCADE;) and that you need to concatenate using the ?? the values selected from the database.

6. What SQLPlus command is used to format output from a select?

Level: low

Expected answer: This is best done with the COLUMN command.

7. You want to group the following set of select returns, what can you group on?

Max(sum_of_cost), min(sum_of_cost), count(item_no), item_no

Level: Intermediate

Expected answer: The only column that can be grouped on is the "item_no" column, the rest have aggregate functions associated with them.

8. What special Oracle feature allows you to specify how the cost based system treats a SQL statement?

Level: Intermediate to high

Expected answer: The COST based system allows the use of HINTs to control the optimizer path selection. If they can give some example hints such as FIRST ROWS, ALL ROWS, USING INDEX, STAR, even better.

9. You want to determine the location of identical rows in a table before attempting to place a unique index on the table, how can this be done?

ANS: Oracle tables always have one guaranteed unique column, the rowid column. If you use a min/max function against your rowid and then select against the proposed primary key you can squeeze out the rowids of the duplicate rows pretty quick. For example:

select rowid from emp e

where e.rowid > (select min(x.rowid)

from emp x

where x.emp_no = e.emp_no);

10. What is a Cartesian product?

ANS: result of an unrestricted join of two or more tables. number of rows = #row of tab1 x #row of tab2 ...

11. You are joining a local and a remote table, the network manager complains about the traffic involved, how can you reduce the network traffic?,

Ans: push the processing of the remote data to the remote instance by using a view to pre-select the information for the join. This will result in only the data required for the join being sent across.

12. What is the default ordering of an ORDER BY clause in a SELECT statement?